So what is MapReduce?

Well besides being the most wonderful model I've come across so far, it is a program that comprises of a Map() function and a Reduce() function. The Mapper filters and sorts and sends key value pairs to a shuffler and sorter. The shuffler and sorter pairs common keys together and then sends it to the Reducer that summaries the data.

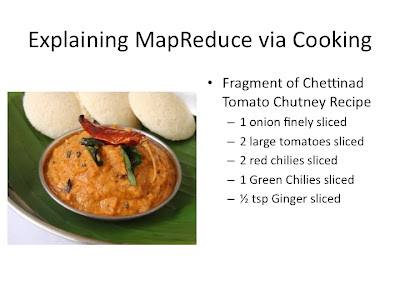

MapReduce Via Cooking

Lets start by making my favorite South Indian Chutney; Chettinad Tomato Chutney. Please note that the recipe below is just a fragment of the entire recipe. The picture and full recipe is locate at this link if you would like to try it.

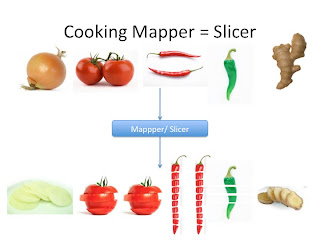

First we would have to slice all the veggies. As shown below. This is similar to the mapper function in MapReduce. The human slicer will throw out all bad veggies and slice all fresh veggies. The key would be the veggie name and the value would be individual slices.

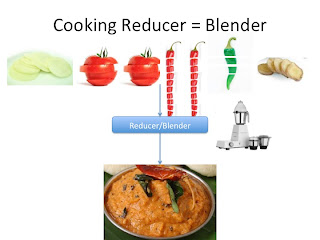

This would be sent to the Shuffler and sorter but we will come back to that. Now just imagine it is sent directly to the Reducer or Blender. The blender will mush everything into one tomato chutney dish. Looks good right?

Now imagine you own a South Indian Restaurant. This restaurant is extremely successful and your famous Chettinad Tomato Chutney is a top seller. Every day you sell at least 150 orders of it.

How would you accomplish this task?

First you will need a large supply of onions, tomatoes, red chilies, green chilies, and ginger

Next you will need to hire a bunch of human slicers

Then you will have to buy many blenders

You will also have to Shuffle and sort sliced similar veggies together from different slicers

Then blend appropriate quantities of each veggie together.

This is MapReduce! Processing a lot of things in parallel.

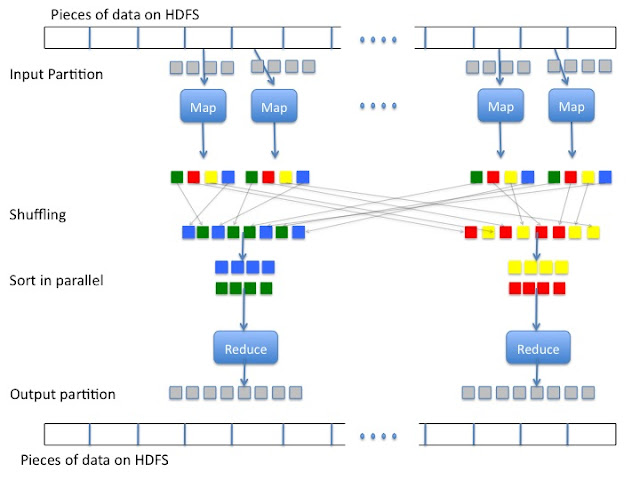

Below you will find an outline of Hadoop and MapReduce. Hadoop adds to MapReduce a file saving system called Hadoop Distributed File System (HDFS). I will not talk about HDFS any further here. The data is stored on HDFS and then partitioned into smaller pieces and sent to the map functions on multiple cores or computers. Map sends key value pairs to the shuffler. The shuffler will send specific keys to a specific sorter. The sorter will pair up all keys and append all values to the same key and send it to the reducer where the data is combined together and summarized. The data is then stored on HDFS.